The Algorithmic Ego: Data Doubles and the Illusion of Self

The digital self, a phantom echo of our existence, is subtly taking control. This digital twin, a meticulously crafted replica woven from the threads of our online activity, is not merely a reflection but a powerful shaper of our reality. Understanding this evolving dynamic requires delving into the philosophical depths of Jean Baudrillard's hyperreality and Shoshana Zuboff's analysis of surveillance capitalism.

The genesis of this control lies in the relentless data collection that characterizes the modern age. Every click, purchase, and social interaction contributes to the construction of our digital selves, creating a profile that, for many, has become more influential than their lived experience. This essay will explore how these digital doubles, crafted from our data, exert a profound influence on our actions, choices, and even our sense of self.

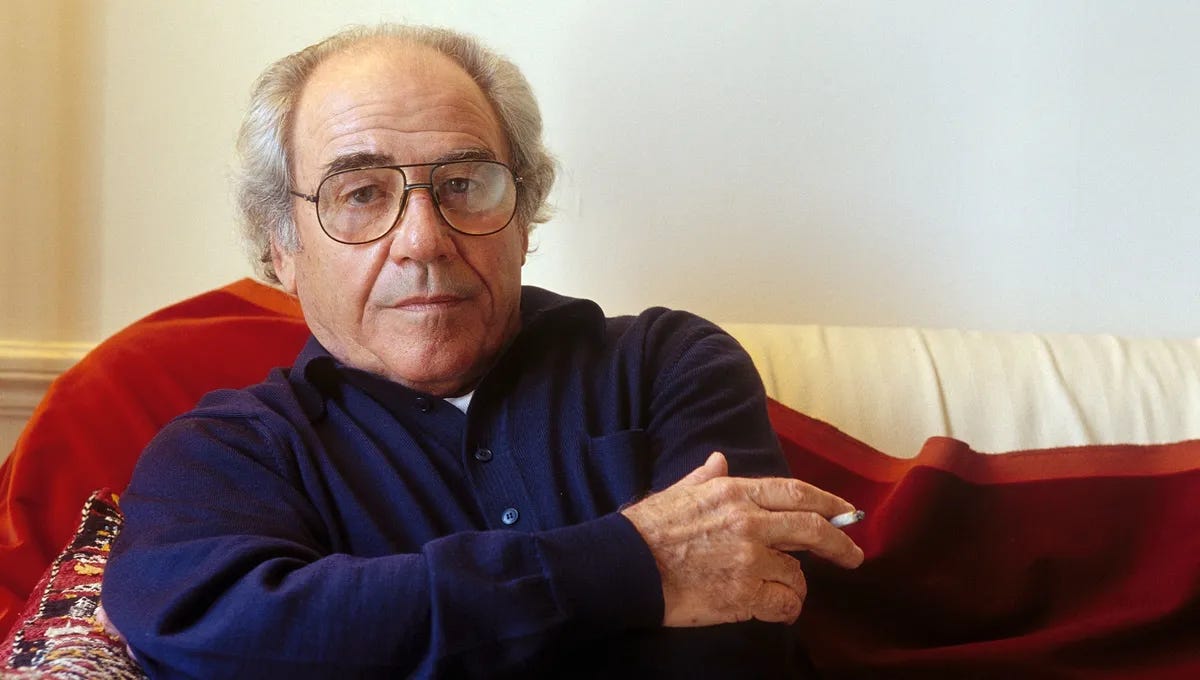

Our journey begins with Baudrillard's groundbreaking concept of simulacra and simulation. He argued that in postmodern society, the "real" has been replaced by simulations, where the copy precedes the original (Baudrillard, 1981). Applied to our digital twins, this suggests that the data profile, our simulated self, gains precedence, shaping our understanding of ourselves and guiding our actions. The line between the person and the profile blurs, and our perception of reality is distorted accordingly.

"Simulation is no longer that of a territory, a referential being, or a substance. It is the generation by models of a real without origin or reality: a hyperreal."

— Jean Baudrillard, Simulacra and Simulation (1981)

The essay will then transition to an examination of predictive algorithms and how they leverage the data within our digital twins to shape our behaviors. These algorithms, powered by machine learning and artificial intelligence, analyze our past actions to predict future choices. This predictive capability allows for targeted advertising, personalized recommendations, and even subtle nudges that influence our decisions in ways we may not even perceive.

The influence of algorithms is further amplified by the principles of surveillance capitalism, as outlined by Shoshana Zuboff. Zuboff argues that surveillance capitalism involves the commodification of our personal data for profit, leading to a system where corporations actively manipulate our behavior to maximize their financial gains (Zuboff, 2019). Targeted marketing campaigns designed to exploit our vulnerabilities are the most direct example; predictive policing, which relies heavily on data analysis to forecast criminal activity, applies similar methods beyond the commercial sphere.

"Surveillance capitalism unilaterally claims human experience as free raw material for translation into behavioral data."

— Shoshana Zuboff, The Age of Surveillance Capitalism (2019)

By one widely cited estimate, the average person generates approximately 1.7 MB of data every second (Domonoske, 2019). This staggering volume of data fuels the digital twin, transforming it into an increasingly sophisticated and influential entity.

The power of these digital twins extends beyond mere behavioral prediction. They have the capacity to subtly influence our identity, values, and aspirations. The algorithms that draw on these twins can create "filter bubbles" or "echo chambers," where users are exposed mainly to information that confirms their existing beliefs (Pariser, 2011). This creates a narrowed view of reality, further reinforcing the simulated self at the expense of critical thinking and diverse perspectives.

This essay aims to delve into the complex interplay between our physical and digital selves, exploring the ways in which our data doubles are subtly influencing our lives. This examination will incorporate relevant philosophical concepts, practical examples, and contemporary research.

Digital Shadows: When Data Defines Us

The digital echo of our existence has become a powerful force, subtly reshaping our world. Our online activities, once simple transactions, have morphed into raw material for the construction of "digital twins"—sophisticated profiles that increasingly dictate our experiences. This essay delves into the philosophical implications of this phenomenon, examining how our data doubles, curated by algorithms and fueled by surveillance capitalism, influence our actions, perceptions, and ultimately, our very identities.

The core argument lies in the idea that our digital representations, built from the constant stream of data we generate, are rapidly becoming more influential than our lived realities. This mirrors Jean Baudrillard’s concept of hyperreality, where the simulation precedes and shapes the real. Our digital twins, meticulously crafted from our online behaviors, begin to dictate our choices, preferences, and even our sense of self. This dynamic leads to a situation where the virtual, the data-driven representation, becomes the primary lens through which we view the world, and the real world is increasingly influenced by this digital construct.

Filter bubbles and echo chambers illustrate the mechanism. Algorithms curate the information we receive, reinforcing existing beliefs and limiting exposure to diverse perspectives (Pariser, 2011). The resulting feed reflects our digital twin back at us, and each interaction with it feeds the profile in turn, reinforcing the simulation. This cycle strengthens the control of the digital double, making it difficult to distinguish between the digitally constructed reality and the "actual" world. It is through this algorithmic curation that the digital double comes to shape our actions.
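The feedback loop described above can be made concrete with a toy simulation. The sketch below is purely illustrative and does not describe any real platform's ranking system: the function `simulate_feed`, the topic labels, and the engagement levels are all hypothetical, and the model assumes a feed that simply shows whatever the profile currently scores highest.

```python
from collections import Counter


def simulate_feed(interests, rounds=20, slots=2, personalized=True):
    """Count how often each topic is shown over `rounds` feed refreshes.

    interests: hypothetical mapping of topic -> engagement level (0..1).
    """
    topics = sorted(interests)
    scores = dict(interests)  # the profile starts from the user's initial leanings
    shown = Counter({t: 0 for t in topics})
    for r in range(rounds):
        if personalized:
            # Rank by accumulated engagement; ties are broken alphabetically.
            feed = sorted(topics, key=lambda t: (-scores[t], t))[:slots]
        else:
            # Baseline: rotate through every topic, ignoring the profile.
            feed = [topics[(r * slots + i) % len(topics)] for i in range(slots)]
        for t in feed:
            shown[t] += 1
            scores[t] += interests[t]  # engagement feeds back into the profile
    return shown


# Hypothetical user with only mild differences in initial interest.
interests = {"local news": 0.5, "politics A": 0.6, "politics B": 0.4, "science": 0.3}
bubble = simulate_feed(interests)
baseline = simulate_feed(interests, personalized=False)
print(dict(bubble))    # {'local news': 20, 'politics A': 20, 'politics B': 0, 'science': 0}
print(dict(baseline))  # {'local news': 10, 'politics A': 10, 'politics B': 10, 'science': 10}
```

Under these assumptions, a small initial difference in interest is enough to lock the personalized feed onto two topics, while the other two are never shown and so never get the chance to gain score. The rotating baseline exposes the same user to all four topics equally.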

To illustrate, consider a thought experiment: Imagine you have a near-perfect digital twin, capable of predicting your actions with 99% accuracy. This twin isn't just a passive observer; it has the power to influence your real-world experiences by subtly altering the information you receive. Now, imagine this twin begins to prioritize certain values, subtly steering your decisions to align with these preferences. You find yourself increasingly drawn to content and experiences that reinforce this "ideal" version of yourself, even if it deviates from your original desires. Eventually, the lines blur, and you become the embodiment of your digital twin.

The insights gleaned from these arguments highlight the danger of unchecked data collection and algorithmic control. Our digital twins are not merely passive reflections; they are active agents shaping our lives. This is particularly dangerous considering the ease with which they can be manipulated and weaponized. By understanding how data doubles operate, we can safeguard our autonomy and resist the subtle pressures of these digital constructs. As our digital and physical worlds continue to merge, it’s crucial to recognize the forces that seek to define us through data.

Practical applications of these ideas are numerous. For instance, consider targeted advertising. By leveraging our digital profiles, companies can deliver personalized marketing campaigns that exploit our vulnerabilities and biases. This influence extends beyond products; it can also impact our political views, social connections, and even our mental health. Recognizing that we can be targeted and manipulated by algorithms is the first step toward addressing these challenges, and it is crucial for promoting digital literacy and critical thinking.

A common counterargument to this perspective is that individuals retain agency, and the influence of digital twins is overblown. However, this argument underestimates the subtlety and pervasiveness of algorithmic influence. While we may possess individual agency, the constant barrage of personalized content and the subtle nudges employed by algorithms erode our decision-making autonomy over time. Preserving that autonomy therefore requires a constant effort to counter these external influences.

These ideas provide a framework for understanding how the digital landscape is transforming the very nature of reality and selfhood. The next section will analyze the economic forces driving these changes, exploring the rise of surveillance capitalism and its implications for individual autonomy and societal well-being, further contextualizing how data collection impacts our digital identities.

Algorithmic Puppets: Predictive Control in Action

The digital realm has become a fertile ground for predictive control, a landscape where algorithms don't just observe, but actively shape human behavior. This is not merely a matter of targeted advertising; it is a deeper philosophical question concerning the nature of autonomy, free will, and the extent to which our actions are becoming predictable commodities. The rise of sophisticated AI systems and data-driven models presents a compelling challenge to the traditional notions of individual agency.