Michel Foucault's Digital Panopticon: How AI & Social Media Became Our Prison

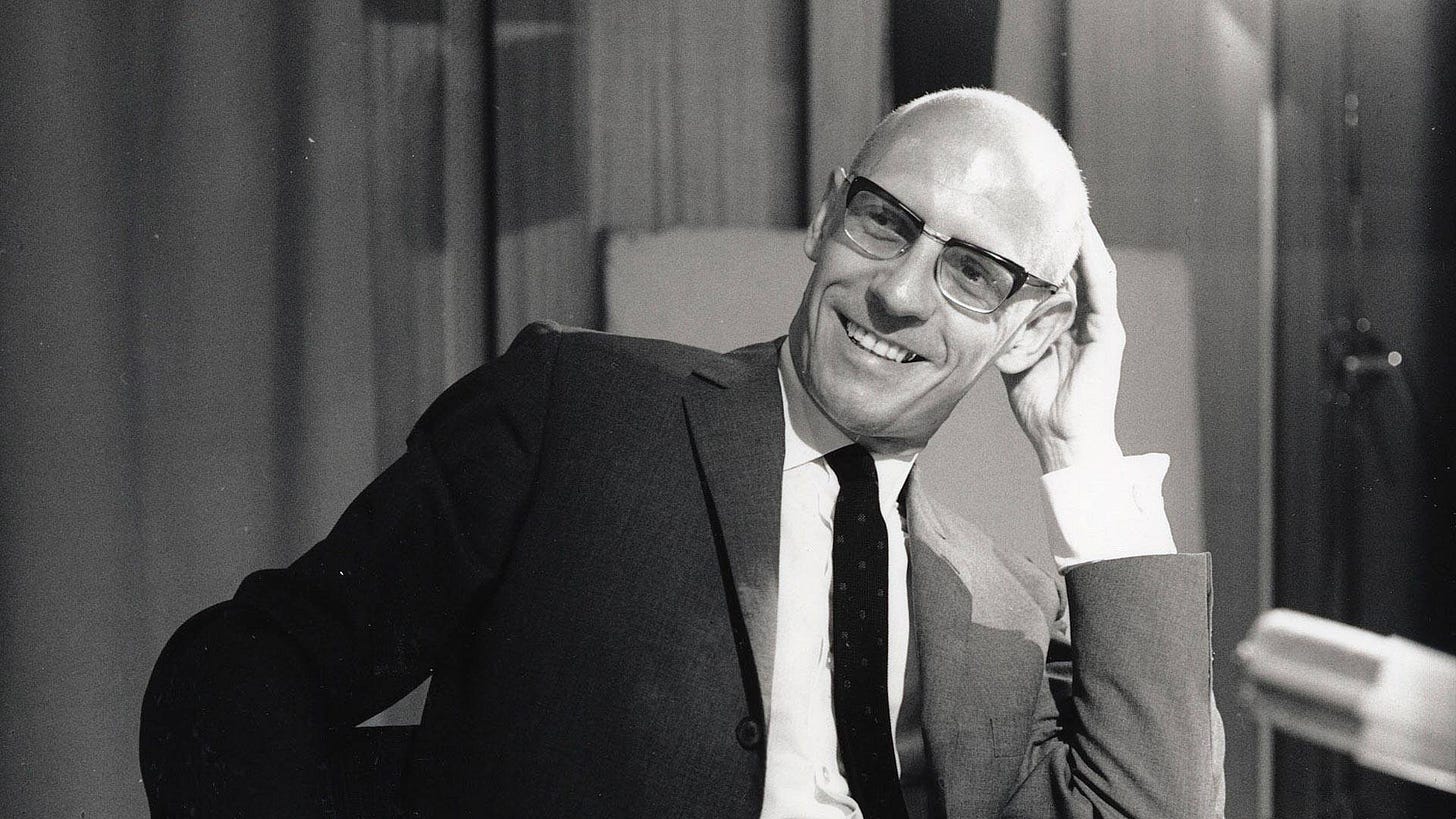

Have you ever deleted a comment online, not because it was factually incorrect, but because you worried about how it might be perceived? Or hesitated to "like" something, fearing judgment from your digital peers? This isn't just about online etiquette; it's a symptom of something much deeper. It's a feeling, a subtle coercion, that the brilliant yet unsettling philosopher Michel Foucault analyzed with chilling precision.

The Panopticon's Shadow: A Prison of the Mind

Michel Foucault, a French intellectual of immense influence, was interested not just in the *what* of power but in the *how*. How does power operate? How does it shape us? In *Discipline and Punish* (1975), he proposed a truly disturbing answer, one rooted in an architectural design: the Panopticon. Devised by Jeremy Bentham in the late 18th century, the Panopticon was a prison designed so that inmates could be observed without knowing when they were being watched. The cells were arranged in a ring around a central tower whose windows were screened so that no one could see inside, allowing a single guard to silently monitor every cell. The prisoners, however, could never be certain whether they were under surveillance.

The key here is not constant surveillance but the *possibility* of it. This, Foucault argued, leads to self-discipline. The prisoners internalize the guard's gaze, modifying their behavior as if always under observation. They become their own jailers. This, according to Foucault, is how power truly works: not through brute force, but through control achieved by internalizing the gaze.

"Power is everywhere; not because it embraces everything, but because it comes from everywhere." - Michel Foucault

From Prison Walls to the Algorithm: The Digital Panopticon Takes Shape

Now, fast forward to today. Consider social media, the internet, and artificial intelligence. Are we not, in a very real sense, living within a digital Panopticon? Think about it. Our every click, every search, every “like” is tracked, recorded, and analyzed. Algorithms, the invisible guards of this digital prison, constantly assess our behavior, predicting our desires, and shaping our choices.

But it's not just about data collection; it's about the *implications* of that data. The algorithms learn our preferences, our fears, and our vulnerabilities. They then feed us content designed to reinforce those very things, subtly manipulating our perceptions and, ultimately, our actions. We see personalized ads, targeted news feeds, and echo chambers designed to keep us engaged, and, crucially, *predictable*.

The Illusion of Freedom: Why We Internalize the Gaze

The beauty, or perhaps the horror, of the digital Panopticon lies in its subtlety. We're not being forced into cells. Instead, we're given the illusion of freedom. We *choose* to participate, to share our thoughts, our feelings, our lives. But this choice is often guided by an unseen hand: the algorithm, the data miners, the marketers, and the social media platforms, all subtly shaping our desires and behavior. The result? We become the product, and the algorithm can often anticipate what we'll do before we know it ourselves.

Consider the impact of “likes” and “shares.” These are forms of social validation that drive us to curate our online personas. We tailor our content, crafting an image designed to garner approval. This isn’t just vanity; it's a survival mechanism in a world where online reputation matters. It's the modern version of the prisoner modifying their behavior in the face of the unknown gaze.

The Predictive Power: AI as the Ultimate Guard

The real game-changer is artificial intelligence. AI doesn't just observe; it *predicts*. It analyzes our behavior, anticipates our needs, and proactively offers us choices. This predictive power takes the digital Panopticon to a new level. It's no longer about reacting to our actions; it's about shaping them *before* they even occur. We're not just being watched; we're being gently nudged, subtly guided, towards predetermined outcomes.


Breaking Free: Resisting the Digital Panopticon

So, what can we do? Are we doomed to be forever trapped within this digital prison? Absolutely not. Awareness is the first step. Understanding the mechanics of the Digital Panopticon allows us to critically assess our online behavior.

Here are a few suggestions to begin the process of resistance:

Be mindful of your data footprint: Understand what information you share and with whom.

Question the algorithm: Don't blindly accept what you see online. Consider the source, the biases, and the motivations.

Cultivate critical thinking: Develop the ability to analyze information and form your own opinions, free from algorithmic manipulation.

Embrace diverse perspectives: Actively seek out information from a variety of sources to break free from echo chambers.

Prioritize real-world connection: Invest in face-to-face interactions and meaningful relationships outside of the digital realm.

These steps are not about abandoning technology; they are about reclaiming our autonomy. They are about becoming conscious actors in our own lives rather than passive recipients of algorithmic manipulation. The goal is to become less *predictable* and, therefore, less controllable.


Reclaiming the Gaze

Michel Foucault’s ideas, though born from philosophical inquiry, are shockingly relevant to the digital age. The Digital Panopticon, built on the foundations of AI, social media, and big data, offers a chilling, yet insightful, framework for understanding our current predicament. We are constantly under surveillance. We are internalizing the gaze of the algorithm. We are, in a sense, becoming our own jailers.

But just as the prisoner in the Panopticon could choose to resist, so too can we. By understanding the mechanics of this new form of power, and by actively resisting its influence, we can begin to reclaim our agency and our freedom. The fight for autonomy in the digital age is the fight to reclaim the gaze—to control how we are seen and, ultimately, how we see ourselves. It’s a fight we must all embrace.
